Facebook employs artificial intelligence to prevent suicide

Photo courtesy of Shay Carlson

SHAY CARLSON, STAFF WRITER

Just a year after the introduction of Facebook Live, the social media site announced earlier this month that it will use artificial intelligence algorithms to spot, and hopefully prevent, suicide and self-harm, particularly on its live-streaming platform.

Spurred by the death of a 14-year-old Miami girl who live-streamed her suicide on Facebook in January of this year, CEO Mark Zuckerberg released a statement stressing the importance of early detection and prevention on Facebook.

“There have been terribly tragic events —like suicides, some live streamed— that perhaps could have been prevented if someone had realized what was happening and reported them sooner,” wrote Zuckerberg.

Previously, Facebook relied on users’ friends to report concerning content using a button that flagged it for Facebook’s human review team. Earlier this month, Facebook added easy-to-access prevention and help tools to Facebook Live and the Messenger app, in the hope that users will find it easier than ever to report concerning content.

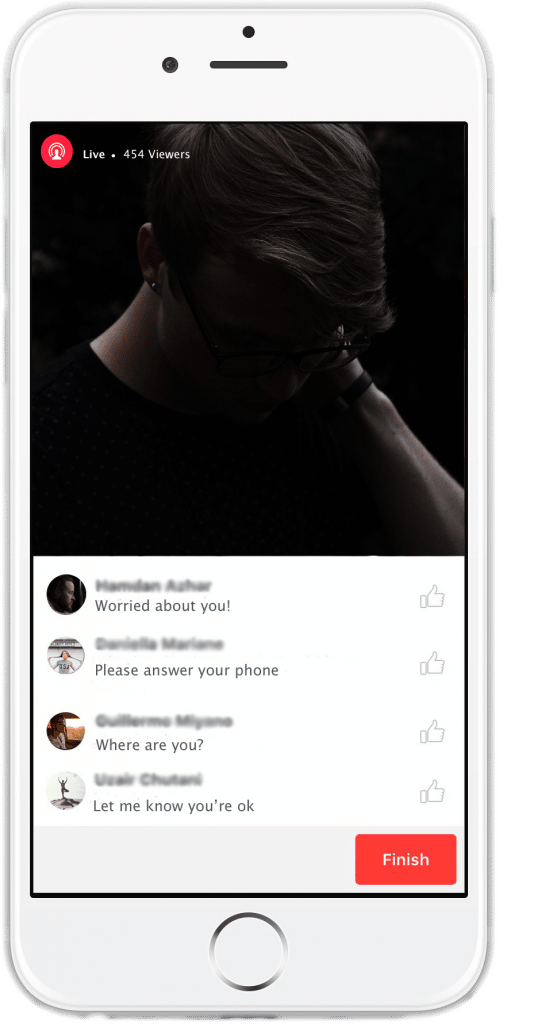

Artificial intelligence (AI) will also be fully implemented on the U.S. site to monitor users’ content and flag anything that looks suspicious for Facebook’s human review team. The AI has been trained on content that was previously flagged as “at risk,” such as posts in which a user expresses sadness or pain and friends respond with comments like “Are you ok?” and “I’m worried about you.”

The new Facebook Live help menu will let viewers express concern about a live stream. The flagged stream is then sent to Facebook’s rapid-review community operations team, which can message the broadcaster during the live stream and offer help through a variety of helplines and websites.

Facebook product manager Vanessa Callison-Burch told the BBC, “We know that speed is critical when things are urgent.” Willa Casstevens, an associate professor at North Carolina State University who studies suicide prevention, praised the new live-stream tool, saying, “In the moment, a caring hand reached out can move mountains and work miracles.”

Critics of the new measure worry that the AI will do little to prevent suicide, since phrases like “kill myself” and “going to die” are so often used colloquially on social media. “It’s hard for algorithms to do a good job of distinguishing [colloquialisms] from situations where someone really means it,” said Joe Franklin, an assistant professor at Florida State University, in an interview with technologyreview.com. Others criticized Facebook for not immediately shutting down a reported live stream or removing the reported content.

Jennifer Guadagno, Facebook’s lead researcher on the new preventive measure, explained, “Cutting off the stream too early would remove the opportunity for people to reach out and offer support.”

While many are hopeful that this new step will help reduce suicide, the second-leading cause of death among 15- to 29-year-olds, others worry that Facebook is stepping too far into users’ privacy.

“Going forward, there are even more cases where our community should be able to identify risks related to mental health, disease or crime,” wrote Zuckerberg in his statement in January.

Regardless of how users feel about the new prevention measures, AI looks set to become an integral part of social media in the years to come, and it will play a vital role in shaping how people use and view these platforms.